YouTube is an American online video-sharing platform headquartered in San Bruno, California. Three former PayPal employees—Chad Hurley, Steve Chen, and Jawed Karim—created the service in February 2005. Google bought the site in November 2006 for US$1.65 billion; YouTube now operates as one of Google's subsidiaries.

YouTube allows users to upload, view, rate, share, add to playlists, report, comment on videos, and subscribe to other users. It offers a wide variety of user-generated and corporate media videos. Available content includes video clips, TV show clips, music videos, short and documentary films, audio recordings, movie trailers, live streams, and other content such as video blogging, short original videos, and educational videos. Most content on YouTube is uploaded by individuals, but media corporations including CBS, the BBC, Vevo, and Hulu offer some of their material via YouTube as part of the YouTube partnership program. Unregistered users can only watch (but not upload) videos on the site, while registered users are also permitted to upload an unlimited number of videos and add comments to videos. Videos that are age-restricted are available only to registered users affirming themselves to be at least 18 years old.

YouTube and selected creators earn advertising revenue from Google AdSense, a program which targets ads according to site content and audience. The vast majority of its videos are free to view, but there are exceptions, including subscription-based premium channels, film rentals, as well as YouTube Music and YouTube Premium, subscription services respectively offering premium and ad-free music streaming, and ad-free access to all content, including exclusive content commissioned from notable personalities. As of February 2017, there were more than 400 hours of content uploaded to YouTube each minute, and one billion hours of content being watched on YouTube every day. As of August 2018, the website is ranked as the second-most popular site in the world, according to Alexa Internet, just behind Google.[2] As of May 2019, more than 500 hours of video content are uploaded to YouTube every minute.[7] Based on reported quarterly advertising revenue, YouTube is estimated to have US$15 billion in annual revenues.

YouTube has faced criticism over aspects of its operations, including its handling of copyrighted content contained within uploaded videos,[8] its recommendation algorithms perpetuating videos that promote conspiracy theories and falsehoods,[9] hosting videos ostensibly targeting children but containing violent or sexually suggestive content involving popular characters,[10] videos of minors attracting pedophilic activities in their comment sections,[11] and fluctuating policies on the types of content that are eligible to be monetized with advertising.[8]

History

Founding and initial growth (2005–2006)

YouTube was founded by Steve Chen, Chad Hurley, and Jawed Karim, who were all early employees of PayPal.[12] Hurley had studied design at Indiana University of Pennsylvania, and Chen and Karim studied computer science together at the University of Illinois at Urbana–Champaign.[13]

Karim said the inspiration for YouTube first came from Janet Jackson's role in the 2004 Super Bowl incident when her breast was exposed during her performance, and later from the 2004 Indian Ocean tsunami. Karim could not easily find video clips of either event online, which led to the idea of a video sharing site.[14] Hurley and Chen said that the original idea for YouTube was a video version of an online dating service, and had been influenced by the website Hot or Not.[15][16] They created posts on Craigslist asking attractive females to upload videos of themselves to YouTube in exchange for a $100 reward.[17] Difficulty in finding enough dating videos led to a change of plans, with the site's founders deciding to accept uploads of any type of video.[18]

According to a story that has often been repeated in the media, Hurley and Chen developed the idea for YouTube during the early months of 2005, after they had experienced difficulty sharing videos that had been shot at a dinner party at Chen's apartment in San Francisco. Karim did not attend the party and denied that it had occurred, but Chen commented that the idea that YouTube was founded after a dinner party "was probably very strengthened by marketing ideas around creating a story that was very digestible".[15]

YouTube began as a venture capital–funded technology startup, primarily from an $11.5 million investment by Sequoia Capital and an $8 million investment from Artis Capital Management between November 2005 and April 2006.[19][20] YouTube's early headquarters were situated above a pizzeria and Japanese restaurant in San Mateo, California.[21] The domain name www.youtube.com was activated on February 14, 2005, and the website was developed over the subsequent months.[22] The first YouTube video, titled Me at the zoo, shows co-founder Jawed Karim at the San Diego Zoo.[23] The video was uploaded on April 23, 2005, and can still be viewed on the site.[24] YouTube offered the public a beta test of the site in May 2005. The first video to reach one million views was a Nike advertisement featuring Ronaldinho in November 2005.[25][26] Following a $3.5 million investment from Sequoia Capital in November, the site launched officially on December 15, 2005, by which time the site was receiving 8 million views a day.[27][28]

At the time of the official launch, YouTube did not have much market recognition. It was not the first video-sharing site on the Internet, as Vimeo was launched in November 2004, though that site remained a side project of its developers from CollegeHumor at the time and did not grow much either.[29] The week of YouTube's launch, NBC-Universal's Saturday Night Live ran a skit "Lazy Sunday" by The Lonely Island. Besides helping to bolster ratings and long-term viewership for Saturday Night Live, "Lazy Sunday"'s status as an early viral video helped establish YouTube as an important website.[30] Unofficial uploads of the skit to YouTube drew in more than five million collective views by February 2006 before they were removed at the request of NBC-Universal about two months later, raising questions of copyright related to viral content.[31] Despite eventually being taken down, these duplicate uploads of the skit helped popularize YouTube's reach and led to the upload of further third-party content.[32][33] The site grew rapidly and, in July 2006, the company announced that more than 65,000 new videos were being uploaded every day, and that the site was receiving 100 million video views per day.[34]

The choice of the name www.youtube.com led to problems for a similarly named website, www.utube.com. The site's owner, Universal Tube & Rollform Equipment, filed a lawsuit against YouTube in November 2006 after being regularly overloaded by people looking for YouTube. Universal Tube has since changed the name of its website to www.utubeonline.com.[35][36]

Acquisition by Google (2006–2013)

On October 9, 2006, Google Inc. announced that it had acquired YouTube for $1.65 billion in Google stock,[37][38] and the deal was finalized on November 13, 2006.[39][40] Google's acquisition of YouTube launched a newfound interest in video-sharing sites; IAC, which now owned Vimeo after acquiring CollegeHumor, used its asset to develop a competing site to YouTube, though one focused on supporting the content creator to distinguish itself from YouTube.[29]

In March 2010, YouTube began free streaming of certain content, including 60 cricket matches of the Indian Premier League. According to YouTube, this was the first worldwide free online broadcast of a major sporting event.[41] On March 31, 2010, the YouTube website launched a new design, with the aim of simplifying the interface and increasing the time users spend on the site. Google product manager Shiva Rajaraman commented: "We really felt like we needed to step back and remove the clutter."[42] In May 2010, YouTube videos were watched more than two billion times per day.[43][44][45] This increased to three billion in May 2011,[46][47][48] and four billion in January 2012.[49][50] In February 2017, one billion hours of YouTube was watched every day.[51][52][53]

According to data published by market research company comScore, YouTube is the dominant provider of online video in the United States, with a market share of around 43% and more than 14 billion views of videos in May 2010.[54]

In October 2010, Hurley announced that he would be stepping down as chief executive officer of YouTube to take an advisory role, and that Salar Kamangar would take over as head of the company.[55] In April 2011, James Zern, a YouTube software engineer, revealed that 30% of videos accounted for 99% of views on the site.[56] In November 2011, the Google+ social networking site was integrated directly with YouTube and the Chrome web browser, allowing YouTube videos to be viewed from within the Google+ interface.[57]

In May 2011, 48 hours of new videos were uploaded to the site every minute,[49] which increased to 60 hours every minute in January 2012,[49] 100 hours every minute in May 2013,[58][59] 300 hours every minute in November 2014,[60] and 400 hours every minute in February 2017.[61] As of January 2012, the site had 800 million unique users a month.[62] It has been claimed, by The Daily Telegraph in 2008, that in 2007, YouTube consumed as much bandwidth as the entire Internet in 2000.[63] According to third-party web analytics providers, Alexa and SimilarWeb, YouTube is the second-most visited website in the world, as of December 2016; SimilarWeb also lists YouTube as the top TV and video website globally, attracting more than 15 billion visitors per month.[2][64][65] In October 2006, YouTube moved to a new office in San Bruno, California.[66]

In December 2011, YouTube launched a new version of the site interface, with the video channels displayed in a central column on the home page, similar to the news feeds of social networking sites.[67] At the same time, a new version of the YouTube logo was introduced with a darker shade of red, the first change in design since October 2006.[68]

In early March 2013, YouTube finalized the transition for all channels to the previously optional "One Channel Layout," which removed many customization options and custom background images for consistency, and split the channel information into different tabs (Home/Feed, Videos, Playlists, Discussion, About) rather than one unified page.[69]

New revenue streams (2013–ongoing)

In May 2013, YouTube launched a pilot program for content providers to offer premium, subscription-based channels within the platform.[70][71] In February 2014, Susan Wojcicki was appointed CEO of YouTube.[72] In November 2014, YouTube announced a subscription service known as "Music Key," which bundled ad-free streaming of music content on YouTube with the existing Google Play Music service.[73]

In February 2015, YouTube released a secondary mobile app known as YouTube Kids. The app is designed to provide an experience optimized for children. It features a simplified user interface, curated selections of channels featuring age-appropriate content, and parental control features.[74] Later on August 26, 2015, YouTube launched YouTube Gaming—a video gaming-oriented vertical and app for videos and live streaming, intended to compete with the Amazon.com-owned Twitch.[75]

In October 2015, YouTube announced YouTube Red (now YouTube Premium), a new premium service that would offer ad-free access to all content on the platform (succeeding the Music Key service released the previous year), premium original series and films produced by YouTube personalities, as well as background playback of content on mobile devices. YouTube also released YouTube Music, a third app oriented towards streaming and discovering the music content hosted on the YouTube platform.[76][77][78]

In January 2016, YouTube expanded its headquarters in San Bruno by purchasing an office park for $215 million. The complex has 51,468 square metres (554,000 square feet) of space and can house up to 2,800 employees.[79]

On August 29, 2017, YouTube officially launched the "polymer" redesign of its user interfaces based on Material Design language as its default, as well as a redesigned logo that is built around the service's play button emblem.[80]

On April 3, 2018, a shooting took place at YouTube's headquarters in San Bruno, California.[81]

On May 17, 2018, YouTube announced the re-branding of YouTube Red as YouTube Premium (accompanied by a major expansion of the service into Canada and 13 European markets), as well as the upcoming launch of a separate YouTube Music subscription.[82]

In September 2018, YouTube began to phase out the separate YouTube Gaming website and app, and introduced a new Gaming portal within the main service. YouTube staff argued that the separate platform was causing confusion and that the integration would allow the features developed for the service (including game-based portals and enhanced discoverability of gaming-related videos and live streaming) to reach a broader audience through the main YouTube website.[83]

In July 2019, it was announced that YouTube would discontinue support for Nintendo 3DS systems on September 3, 2019. However, owners of the New Nintendo 3DS or New Nintendo 3DS XL could still access YouTube through the Internet browser.[84]

In November 2019, it was announced that YouTube was gradually phasing out the classic version of its Creator Studio across all users by the spring of 2020.[85] As of August 2020, the classic studio is no longer available.[86]

During the COVID-19 pandemic, when most of the world was under stay-at-home orders, usage of services such as YouTube grew greatly. In response to EU officials requesting that such services reduce bandwidth so that medical entities had sufficient bandwidth to share information, YouTube, along with Netflix, stated that they would reduce streaming quality for at least thirty days so as to cut the bandwidth use of their services by 25%, in compliance with the EU's request.[87] YouTube later announced that it would continue with this move worldwide: "We continue to work closely with governments and network operators around the globe to do our part to minimize stress on the system during this unprecedented situation."[88]

In June 2020, the ability to use categories was phased out.[citation needed]

Features

Video technology

YouTube primarily uses the VP9 and H.264/MPEG-4 AVC video formats, and the Dynamic Adaptive Streaming over HTTP protocol.[89] By January 2019, YouTube had begun rolling out videos in AV1 format.[90]

Playback

Previously, viewing YouTube videos on a personal computer required the Adobe Flash Player plug-in to be installed in the browser.[91] In January 2010, YouTube launched an experimental version of the site that used the built-in multimedia capabilities of web browsers supporting the HTML5 standard.[92] This allowed videos to be viewed without requiring Adobe Flash Player or any other plug-in to be installed.[93][94] The YouTube site had a page that allowed supported browsers to opt into the HTML5 trial. Only browsers that supported HTML5 Video using the MP4 (with H.264 video) or WebM (with VP8 video) formats could play the videos, and not all videos on the site were available.[95][96]

On January 27, 2015, YouTube announced that HTML5 would be the default playback method on supported browsers. YouTube used to employ Adobe Dynamic Streaming for Flash,[97] but with the switch to HTML5 video now streams video using Dynamic Adaptive Streaming over HTTP (MPEG-DASH), an adaptive bit-rate HTTP-based streaming solution optimizing the bitrate and quality for the available network.[98]
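
The idea behind adaptive bit-rate streaming can be illustrated with a minimal Python sketch that picks the highest rendition whose bitrate fits within the measured network throughput. The rendition ladder, the bitrates, the safety factor, and the function name are all hypothetical values chosen for illustration; they are not YouTube's actual encoding settings or player logic.

```python
# Hypothetical rendition ladder: (label, bitrate in kilobits per second).
# These numbers are illustrative assumptions, not YouTube's real values.
RENDITIONS = [
    ("240p", 400),
    ("360p", 750),
    ("480p", 1200),
    ("720p", 2500),
    ("1080p", 5000),
]


def pick_rendition(throughput_kbps, safety_factor=0.8):
    """Return the label of the highest rendition that fits the budget.

    The budget is the measured throughput scaled by a safety factor, so
    that short dips in network speed do not immediately stall playback.
    Falls back to the lowest rendition when nothing fits.
    """
    budget = throughput_kbps * safety_factor
    best = RENDITIONS[0][0]
    for label, bitrate in RENDITIONS:  # ladder is sorted from low to high
        if bitrate <= budget:
            best = label
    return best
```

A real player would typically re-run a decision of this kind for each media segment, so that quality can rise or fall as network conditions change.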

Uploading

All YouTube users can upload videos up to 15 minutes each in duration. Users who have a good track record of complying with the site's Community Guidelines may be offered the ability to upload videos up to 12 hours in length, as well as live streams, which requires verifying the account, normally through a mobile phone.[99][100] When YouTube was launched in 2005, it was possible to upload longer videos, but a ten-minute limit was introduced in March 2006 after YouTube found that the majority of videos exceeding this length were unauthorized uploads of television shows and films.[101] The 10-minute limit was increased to 15 minutes in July 2010.[102] In the past, it was possible to upload videos longer than 12 hours. Videos can be at most 128 GB in size.[99] Video captions are made using speech recognition technology when uploaded. Such captioning is usually not perfectly accurate, so YouTube provides several options for manually entering the captions for greater accuracy.[103]
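
The limits described above can be expressed as a simple check. The following Python sketch is illustrative only: the function and constant names are hypothetical, and it assumes that "128 GB" means 128 × 10^9 bytes, which the source does not specify.

```python
from datetime import timedelta

# Limits taken from the text above.
DEFAULT_MAX_DURATION = timedelta(minutes=15)   # all users
VERIFIED_MAX_DURATION = timedelta(hours=12)    # verified accounts in good standing
MAX_SIZE_BYTES = 128 * 10**9                   # assumption: decimal gigabytes


def upload_allowed(duration, size_bytes, verified):
    """Return True if a video fits within the duration and size limits."""
    limit = VERIFIED_MAX_DURATION if verified else DEFAULT_MAX_DURATION
    return duration <= limit and size_bytes <= MAX_SIZE_BYTES
```

For example, a 20-minute video would be rejected for an unverified account but accepted for a verified one, provided it stays under the size limit.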

YouTube accepts videos that are uploaded in most container formats, including AVI, MP4, MPEG-PS, QuickTime File Format and FLV. It supports WebM files and also 3GP, allowing videos to be uploaded from mobile phones.[104]

Videos with progressive scanning or interlaced scanning can be uploaded, but for the best video quality, YouTube suggests interlaced videos be deinterlaced before uploading. All the video formats on YouTube use progressive scanning.[105] YouTube's statistics show that interlaced videos are still being uploaded to YouTube, and there is no sign of that actually dwindling. YouTube attributes this to uploading of made-for-TV content.[106]

Quality and formats

YouTube originally offered videos at only one quality level, displayed at a resolution of 320×240 pixels using the Sorenson Spark codec (a variant of H.263),[107][108] with mono MP3 audio.[109] In June 2007, YouTube added an option to watch videos in 3GP format on mobile phones.[110] In March 2008, a high-quality mode was added, which increased the resolution to 480×360 pixels.[111] In December 2008, 720p HD support was added. At the time of the 720p launch, the YouTube player was changed from a 4:3 aspect ratio to a widescreen 16:9.[112] With this new feature, YouTube began a switchover to H.264/MPEG-4 AVC as its default video compression format. In November 2009, 1080p HD support was added. In July 2010, YouTube announced that it had launched a range of videos in 4K format, which allows a resolution of up to 4096×3072 pixels.[113][114] In March 2015, support for 4K resolution was added, with the videos playing at 3840×2160 pixels. In June 2015, support for 8K resolution was added, with the videos playing at 7680×4320 pixels.[115] In November 2016, support for HDR video was added, which can be encoded with Hybrid Log-Gamma (HLG) or Perceptual Quantizer (PQ).[116] HDR video can be encoded with the Rec. 2020 color space.[117]

In June 2014, YouTube began to deploy support for high frame rate videos up to 60 frames per second (as opposed to 30 before), becoming available for user uploads in October. YouTube stated that this would enhance "motion-intensive" videos, such as video game footage.[118][119][120][121]

YouTube videos are available in a range of quality levels. The former names of standard quality (SQ), high quality (HQ), and high definition (HD) have been replaced by numerical values representing the vertical resolution of the video. The default video stream is encoded in the VP9 format with stereo Opus audio; if VP9/WebM is not supported in the browser/device or the browser's user agent reports Windows XP, then H.264/MPEG-4 AVC video with stereo AAC audio is used instead.[122]
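
The fallback rule described above can be sketched in a few lines of Python. This is a simplified illustration rather than YouTube's actual selection logic: the function names are hypothetical, and detecting Windows XP by the "Windows NT 5.1" user-agent token is an assumption that ignores other XP variants.

```python
def quality_label(vertical_resolution):
    """Name a quality level by its vertical resolution, e.g. 720 -> '720p'."""
    return f"{vertical_resolution}p"


def choose_stream_format(supports_vp9_webm, user_agent):
    """Return a (video codec, audio codec) pair following the rule in the text.

    VP9 with Opus is the default; H.264 with AAC is used when VP9/WebM is
    unsupported or the user agent reports Windows XP.
    """
    # Assumption: "Windows NT 5.1" is the token a Windows XP browser reports.
    reports_windows_xp = "Windows NT 5.1" in user_agent
    if not supports_vp9_webm or reports_windows_xp:
        return ("H.264/MPEG-4 AVC", "AAC")
    return ("VP9", "Opus")
```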

2020 picture quality cut

On March 18, 2020, Thierry Breton, a European commissioner in charge of digital policy of the European Union, urged streaming services including YouTube to limit their services. The request was intended to prevent Europe's broadband networks from crashing as tens of millions of people started telecommuting due to the COVID-19 pandemic in Europe. According to the EU, the streaming platforms should consider offering only standard-definition rather than high-definition programs, and users should be responsible for their data consumption.[123] On March 20, YouTube responded by temporarily downgrading videos to standard definition across the EU, including traffic in the UK.[124]

Annotations

From 2008 to 2017, users could add "annotations" to their videos—such as pop-up text messages and hyperlinks. These functions were notably used as the basis for interactive videos, which used hyperlinks to other videos to achieve branching elements. In March 2017, it was announced that the annotations editor had been discontinued and the feature would be sunset, because their use had fallen rapidly, users had found them to be an annoyance, and because they were incompatible with mobile versions of the service. Annotations were removed entirely from all videos on January 15, 2019. YouTube had introduced standardized widgets intended to replace annotations in a cross-platform manner, including "end screens" (a customizable array of thumbnails for specified videos displayed near the end of the video) and "cards", but they are not backwards compatible with existing annotations, while the removal of annotations will also break all interactive experiences which depended on them.[125][126][127][128]

Live streaming

YouTube carried out early experiments with live streaming, including a concert by U2 in 2009, and a question-and-answer session with US President Barack Obama in February 2010.[129] These tests had relied on technology from 3rd-party partners, but in September 2010, YouTube began testing its own live streaming infrastructure.[130] In April 2011, YouTube announced the rollout of YouTube Live, with a portal page at the URL "www.youtube.com/live". The creation of live streams was initially limited to select partners.[131] It was used for real-time broadcasting of events such as the 2012 Olympics in London.[132] In October 2012, more than 8 million people watched Felix Baumgartner's jump from the edge of space as a live stream on YouTube.[133]

In May 2013, creation of live streams was opened to verified users with at least 1,000 subscribers; in August of that year the number was reduced to 100 subscribers,[134] and in December the limit was removed.[135] In February 2017, live streaming was introduced to the official YouTube mobile app. Live streaming via mobile was initially restricted to users with at least 10,000 subscribers,[136] but as of mid-2017 it has been reduced to 100 subscribers.[137] Live streams can be up to 4K resolution at 60 fps, and also support 360° video.[138] In February 2017, a live streaming feature called Super Chat was introduced, which allows viewers to donate between $1 and $500 to have their comment highlighted.[139]

3D videos

In a video posted on July 21, 2009,[140] YouTube software engineer Peter Bradshaw announced that YouTube users could now upload 3D videos. The videos can be viewed in several different ways, including the common anaglyph (cyan/red lens) method which utilizes glasses worn by the viewer to achieve the 3D effect.[141][142][143] The YouTube Flash player can display stereoscopic content interleaved in rows, columns or a checkerboard pattern, side-by-side or anaglyph using a red/cyan, green/magenta or blue/yellow combination. In May 2011, an HTML5 version of the YouTube player began supporting side-by-side 3D footage that is compatible with Nvidia 3D Vision.[144] The feature set has since been reduced, and the 3D feature currently only supports red/cyan anaglyph with no side-by-side support.
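
The red/cyan anaglyph method mentioned above is commonly produced by taking the red channel from the left-eye image and the green and blue channels from the right-eye image. The following Python sketch shows that general technique on frames represented as nested lists of (r, g, b) tuples; the function name and the frame representation are assumptions made for illustration, and this is not a description of how the YouTube player is implemented.

```python
def red_cyan_anaglyph(left, right):
    """Combine two equally sized RGB frames into a red/cyan anaglyph.

    Each frame is a list of rows, and each row is a list of (r, g, b)
    tuples. The red channel comes from the left-eye frame; the green and
    blue channels (together, cyan) come from the right-eye frame.
    """
    same_shape = len(left) == len(right) and all(
        len(lrow) == len(rrow) for lrow, rrow in zip(left, right)
    )
    if not same_shape:
        raise ValueError("left and right frames must have the same dimensions")
    return [
        [(lp[0], rp[1], rp[2]) for lp, rp in zip(lrow, rrow)]
        for lrow, rrow in zip(left, right)
    ]
```

Viewed through glasses with a red filter over the left eye and a cyan filter over the right, each eye then sees mostly the frame intended for it.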

360-degree videos

In January 2015, Google announced that 360-degree video would be natively supported on YouTube. On March 13, 2015, YouTube enabled 360° videos which can be viewed from Google Cardboard, a virtual reality system. YouTube 360 can also be viewed from all other virtual reality headsets.[145] Live streaming of 360° video at up to 4K resolution is also supported.[138]

In 2017, YouTube began to promote an alternative stereoscopic video format known as VR180, which is limited to a 180-degree field of view but is promoted as being easier to produce than 360-degree video and allowing more depth to be maintained by not subjecting the video to equirectangular projection.[146]

Reels

In late November 2018, YouTube announced that it would introduce a "Story" feature, similar to ones used by Snapchat and Instagram, which would allow its content creators to engage fans without posting a full video.[147] The stories, called "Reels," would be up to 30 seconds in length and would allow users to add "filters, music, text and more, including new "YouTube-y" stickers." Unlike those of other platforms, YouTube's stories could be made multiple times and would not expire. Instead of being placed at the top of the user interface as is commonly done, the "Reels" option would be featured as a separate tab on the creator's channel.[148] As of its announcement, only certain content creators would have access to the "Reels" option, which would be utilized as a beta-version for further feedback and testing. If users engage more with the "Reels" option, it may end up as a more permanent feature and "trigger their appearance on the viewer's YouTube home page as recommendations." As of November 28, 2018, YouTube did not specify when "Reels" would arrive in Beta or when it would be publicly released.[147]

User features

Community

On September 13, 2016, YouTube launched a public beta of Community, a social media-based feature that allows users to post text, images (including GIFs), live videos and others in a separate "Community" tab on their channel.[149] Prior to the release, several creators had been consulted to suggest tools Community could incorporate that they would find useful; these YouTubers included Vlogbrothers, AsapScience, Lilly Singh, The Game Theorists, Karmin, The Key of Awesome, The Kloons, Peter Hollens, Rosianna Halse Rojas, Sam Tsui, Threadbanger and Vsauce3.[150]

Once the feature was officially released, community posts were activated automatically for every channel that reached or already exceeded a specific subscriber threshold. This threshold was lowered over time, from 10,000 subscribers to 1,500 subscribers and then to 1,000 subscribers, which was the threshold as of September 2019.[151]

When the Community tab is enabled for a channel, its channel discussions (known as "channel comments" before the March 2013 "One Channel Layout" redesign was finalized) are permanently erased rather than co-existing with the tab or being migrated.[152]

Content accessibility

YouTube offers users the ability to view its videos on web pages outside their website. Each YouTube video is accompanied by a piece of HTML that can be used to embed it on any page on the Web.[153] This functionality is often used to embed YouTube videos in social networking pages and blogs. Users wishing to post a video discussing, inspired by or related to another user's video are able to make a "video response". On August 27, 2013, YouTube announced that it would remove video responses for being an underused feature.[154] Embedding, rating, commenting and response posting can be disabled by the video owner.[155]
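
As an illustration of embedding, the following Python sketch builds an iframe-based HTML snippet for a given video ID. The helper name, the default dimensions, and the chosen attributes are assumptions for the sake of the example; the exact markup that YouTube provides alongside each video may differ.

```python
from html import escape


def embed_html(video_id, width=560, height=315):
    """Build an HTML iframe snippet that embeds a YouTube video.

    The video ID is escaped so that it cannot break out of the
    attribute value; width and height are coerced to integers.
    """
    src = f"https://www.youtube.com/embed/{video_id}"
    return (
        f'<iframe width="{int(width)}" height="{int(height)}" '
        f'src="{escape(src, quote=True)}" '
        'frameborder="0" allowfullscreen></iframe>'
    )
```

Calling `embed_html("VIDEO_ID")`, where `VIDEO_ID` is a placeholder for a real identifier, returns a string that can be pasted into a blog post or web page.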

YouTube does not usually offer a download link for its videos, and intends for them to be viewed through its website interface.[156] A small number of videos can be downloaded as MP4 files.[157] Numerous third-party web sites, applications and browser plug-ins allow users to download YouTube videos.[158] In February 2009, YouTube announced a test service, allowing some partners to offer video downloads for free or for a fee paid through Google Checkout.[159] In June 2012, Google sent cease and desist letters threatening legal action against several websites offering online download and conversion of YouTube videos.[160] In response, Zamzar removed the ability to download YouTube videos from its site.[161]

Users retain copyright of their own work under the default Standard YouTube License,[162] but have the option to grant certain usage rights under any public copyright license they choose. Since July 2012, it has been possible to select a Creative Commons attribution license as the default, allowing other users to reuse and remix the material.[163]

Platforms

Most modern smartphones are capable of accessing YouTube videos, either within an application or through an optimized website. YouTube Mobile was launched in June 2007, using RTSP streaming for the video.[164] Not all of YouTube's videos are available on the mobile version of the site.[165] Since June 2007, YouTube's videos have been available for viewing on a range of Apple products. This required YouTube's content to be transcoded into Apple's preferred video standard, H.264, a process that took several months. YouTube videos can be viewed on devices including Apple TV, iPod Touch and the iPhone.[166] In July 2010, the mobile version of the site was relaunched based on HTML5, avoiding the need to use Adobe Flash Player and optimized for use with touch screen controls.[167] The mobile version is also available as an app for the Android platform.[168][169] In September 2012, YouTube launched its first app for the iPhone, following the decision to drop YouTube as one of the preloaded apps in the iPhone 5 and iOS 6 operating system.[170] According to GlobalWebIndex, YouTube was used by 35% of smartphone users between April and June 2013, making it the third-most used app.[171]

A TiVo service update in July 2008 allowed the system to search and play YouTube videos.[172] In January 2009, YouTube launched "YouTube for TV", a version of the website tailored for set-top boxes and other TV-based media devices with web browsers, initially allowing its videos to be viewed on the PlayStation 3 and Wii video game consoles.[173][174] In June 2009, YouTube XL was introduced, which has a simplified interface designed for viewing on a standard television screen.[175] YouTube is also available as an app on Xbox Live.[176] On November 15, 2012, Google launched an official app for the Wii, allowing users to watch YouTube videos from the Wii channel.[177] An app was available for Wii U and Nintendo 3DS, but was discontinued in August 2019.[178] Videos can also be viewed on the Wii U Internet Browser using HTML5.[179] Google made YouTube available on the Roku player on December 17, 2013,[180] and, in October 2014, the Sony PlayStation 4.[181] In November 2018, YouTube launched as a downloadable app for the Nintendo Switch.[182]

Localization

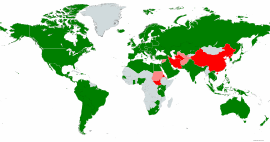

On June 19, 2007, Google CEO Eric Schmidt appeared in Paris to launch the new localization system.[183] The interface of the website is available with localized versions in 103 countries, one territory (Hong Kong) and a worldwide version.[184]

The YouTube interface suggests which local version should be chosen on the basis of the IP address of the user. In some cases, the message "This video is not available in your country" may appear because of copyright restrictions or inappropriate content.[231] The interface of the YouTube website is available in 76 language versions, including Amharic, Albanian, Armenian, Bengali, Burmese, Khmer, Kyrgyz, Laotian, Mongolian, Persian and Uzbek, which do not have local channel versions. Access to YouTube was blocked in Turkey between 2008 and 2010, following controversy over the posting of videos deemed insulting to Mustafa Kemal Atatürk and some material offensive to Muslims.[232][233] In October 2012, a local version of YouTube was launched in Turkey, with the domain youtube.com.tr. The local version is subject to the content regulations found in Turkish law.[234] In March 2009, a dispute between YouTube and the British royalty collection agency PRS for Music led to premium music videos being blocked for YouTube users in the United Kingdom. The removal of videos posted by the major record companies occurred after failure to reach agreement on a licensing deal. The dispute was resolved in September 2009.[235] In April 2009, a similar dispute led to the removal of premium music videos for users in Germany.[236]

YouTube Premium

YouTube Premium (formerly YouTube Red) is YouTube's premium subscription service. It offers advertising-free streaming, access to exclusive content, background and offline video playback on mobile devices, and access to the Google Play Music "All Access" service.[237] YouTube Premium was originally announced on November 12, 2014, as "Music Key", a subscription music streaming service, and was intended to integrate with and replace the existing Google Play Music "All Access" service.[238][239][240] On October 28, 2015, the service was relaunched as YouTube Red, offering ad-free streaming of all videos, as well as access to exclusive original content.[241][242][243] As of November 2016, the service had 1.5 million subscribers, with a further million on a free-trial basis.[244] As of June 2017, the first season of YouTube Originals had received 250 million views in total.[245]

In May 2014, before the Music Key service was launched, the independent music trade organization Worldwide Independent Network alleged that YouTube was using non-negotiable contracts with independent labels that were "undervalued" in comparison to other streaming services, and that YouTube would block all music content from labels that did not reach a deal to be included on the paid service. In a statement to the Financial Times in June 2014, Robert Kyncl confirmed that YouTube would block the content of labels that did not negotiate deals to be included in the paid service "to ensure that all content on the platform is governed by its new contractual terms." Stating that 90% of labels had reached deals, he went on to say that "while we wish that we had [a] 100% success rate, we understand that is not likely an achievable goal and therefore it is our responsibility to our users and the industry to launch the enhanced music experience."[246][247][248][249] The Financial Times later reported that YouTube had reached an aggregate deal with Merlin Network, a trade group representing over 20,000 independent labels, for their inclusion in the service. However, YouTube itself has not confirmed the deal.[240]

On September 28, 2016, YouTube named Lyor Cohen, the co-founder of 300 Entertainment and former Warner Music Group executive, the Global Head of Music.[250]

YouTube TV

On February 28, 2017, in a press announcement held at YouTube Space Los Angeles, YouTube announced the launch of YouTube TV, an over-the-top MVPD-style subscription service that would be available for United States customers at a price of US$35 per month. Initially launching in five major markets (New York City, Los Angeles, Chicago, Philadelphia and San Francisco) on April 5, 2017,[251][252] the service offers live streams of programming from the five major broadcast networks (ABC, CBS, The CW, Fox and NBC), as well as approximately 40 cable channels owned by the corporate parents of those networks, The Walt Disney Company, CBS Corporation, 21st Century Fox, NBCUniversal and Turner Broadcasting System (including among others Bravo, USA Network, Syfy, Disney Channel, CNN, Cartoon Network, E!, Fox Sports 1, Freeform, FX and ESPN). Subscribers can also receive Showtime and Fox Soccer Plus as optional add-ons for an extra fee, and can access YouTube Premium original content (YouTube TV does not include a YouTube Red subscription).[253][254]

During the 2017 World Series (for which it was the presenting sponsor), YouTube TV ads were placed behind home plate. The trademarked red play button logo appeared at the center of the screen, mimicking YouTube's interface.[255]

YouTube Go

YouTube Go is an Android app aimed at making YouTube easier to access on mobile devices in emerging markets. It is distinct from the company's main Android app and allows videos to be downloaded and shared with other users. It also allows users to preview videos, share downloaded videos through Bluetooth, and offers more options for mobile data control and video resolution.[256]

YouTube announced the project in September 2016 at an event in India.[257] It was launched in India in February 2017, and expanded in November 2017 to 14 other countries, including Nigeria, Indonesia, Thailand, Malaysia, Vietnam, the Philippines, Kenya, and South Africa.[258][259] On February 1, 2018, it was rolled out in 130 countries worldwide, including Brazil, Mexico, Turkey, and Iraq. The app is available to around 60% of the world's population.[260][261]

YouTube Music

In early 2018, Cohen began hinting at the possible launch of YouTube's new subscription music streaming service, a platform that would compete with other services such as Spotify and Apple Music.[262] On May 22, 2018, the music streaming platform named "YouTube Music" was launched.[263][264]

ISNI

In 2018, YouTube became an ISNI registry, and announced its intention to begin creating ISNI identifiers to uniquely identify the musicians whose videos it features.[265] ISNI anticipates the number of ISNI IDs "going up by perhaps 3-5 million over the next couple of years" as a result.[266]

April Fools

YouTube featured an April Fools prank on the site on April 1 of every year from 2008 to 2016. In 2008, all links to videos on the main page were redirected to Rick Astley's music video "Never Gonna Give You Up", a prank known as "rickrolling".[267][268] The next year, when clicking on a video on the main page, the whole page turned upside down, which YouTube claimed was a "new layout".[269] In 2010, YouTube temporarily released a "TEXTp" mode which rendered video imagery into ASCII art letters "in order to reduce bandwidth costs by $1 per second."[270]

The next year, the site celebrated its "100th anniversary" with a range of sepia-toned silent, early 1900s-style films, including a parody of Keyboard Cat.[271] In 2012, clicking on the image of a DVD next to the site logo led to a video about a purported option to order every YouTube video for home delivery on DVD.[272]

In 2013, YouTube teamed up with satirical newspaper company The Onion to claim in an uploaded video that the video sharing website was launched as a contest which had finally come to an end, and would shut down for ten years before being re-launched in 2023, featuring only the winning video. The video starred several YouTube celebrities, including Antoine Dodson. A video of two presenters announcing the nominated videos streamed live for 12 hours.[273][274]

In 2014, YouTube announced that it was responsible for the creation of all viral video trends, and revealed previews of upcoming trends, such as "Clocking", "Kissing Dad", and "Glub Glub Water Dance".[275] The next year, YouTube added a music button to the video bar that played samples from "Sandstorm" by Darude.[276] In 2016, YouTube introduced an option to watch every video on the platform in 360-degree mode with Snoop Dogg.[277]

Content partnerships

In 2016, YouTube introduced a global program to develop creators whose videos produce a positive social impact. Google dedicated $1 million to this Creators for Change program.[278] The first three videos from the program premiered at the 2017 Tribeca TV Festival.[279] YouTube expanded the program in 2018.[280] YouTube also launched YouTube Space in 2012, and has since expanded it to 10 global locations. The Space gives content creators a physical location to learn about producing content and provides them with facilities to create content for their YouTube channels.[281]

Social impact

Both private individuals[282] and large production companies[283] have used YouTube to grow audiences. Independent content creators have built grassroots followings numbering in the thousands at very little cost or effort, while mass retail and radio promotion proved problematic.[282] Concurrently, old media celebrities moved into the website at the invitation of a YouTube management that witnessed early content creators accruing substantial followings, and perceived audience sizes potentially larger than those attainable by television.[283] While YouTube's revenue-sharing "Partner Program" made it possible to earn a substantial living as a video producer (its top five hundred partners each earning more than $100,000 annually[284] and its ten highest-earning channels grossing from $2.5 million to $12 million[285]), in 2012 CMU's business editor characterized YouTube as "a free-to-use ... promotional platform for the music labels."[286] In 2013 Forbes' Katheryn Thayer asserted that digital-era artists' work must not only be of high quality, but must elicit reactions on the YouTube platform and social media.[287] Videos of the 2.5% of artists categorized as "mega", "mainstream" and "mid-sized" received 90.3% of the relevant views on YouTube and Vevo in that year.[288] By early 2013 Billboard had announced that it was factoring YouTube streaming data into calculation of the Billboard Hot 100 and related genre charts.[289]

Observing that face-to-face communication of the type that online videos convey has been "fine-tuned by millions of years of evolution," TED curator Chris Anderson referred to several YouTube contributors and asserted that "what Gutenberg did for writing, online video can now do for face-to-face communication."[290] Anderson asserted that it is not far-fetched to say that online video will dramatically accelerate scientific advance, and that video contributors may be about to launch "the biggest learning cycle in human history."[290] In education, for example, the Khan Academy grew from YouTube video tutoring sessions for founder Salman Khan's cousin into what Forbes' Michael Noer called "the largest school in the world," with technology poised to disrupt how people learn.[291] YouTube was awarded a 2008 George Foster Peabody Award,[292] the website being described as a Speakers' Corner that "both embodies and promotes democracy."[293] The Washington Post reported that a disproportionate share of YouTube's most subscribed channels feature minorities, contrasting with mainstream television in which the stars are largely white.[294] A Pew Research Center study reported the development of "visual journalism," in which citizen eyewitnesses and established news organizations share in content creation.[295] The study also concluded that YouTube was becoming an important platform by which people acquire news.[296]

YouTube has enabled people to more directly engage with government, such as in the CNN/YouTube presidential debates (2007) in which ordinary people submitted questions to U.S. presidential candidates via YouTube video, with a techPresident co-founder saying that Internet video was changing the political landscape.[297] Describing the Arab Spring (2010–2012), sociologist Philip N. Howard quoted an activist's succinct description that organizing the political unrest involved using "Facebook to schedule the protests, Twitter to coordinate, and YouTube to tell the world."[298] In 2012, more than a third of the U.S. Senate introduced a resolution condemning Joseph Kony 16 days after the "Kony 2012" video was posted to YouTube, with resolution co-sponsor Senator Lindsey Graham remarking that the video "will do more to lead to (Kony's) demise than all other action combined."[299]

Conversely, YouTube has also allowed government to more easily engage with citizens: the White House's official YouTube channel was the seventh top news organization producer on YouTube in 2012,[302] and in 2013 a healthcare exchange commissioned Obama impersonator Iman Crosson's YouTube music video spoof to encourage young Americans to enroll in Affordable Care Act (Obamacare)-compliant health insurance.[303] In February 2014, U.S. President Obama held a meeting at the White House with leading YouTube content creators not only to promote awareness of Obamacare[304] but more generally to develop ways for government to better connect with the "YouTube Generation."[300] Whereas YouTube's inherent ability to allow presidents to directly connect with average citizens was noted, the YouTube content creators' new media savvy was perceived as necessary to better cope with the website's distracting content and fickle audience.[300]

Some YouTube videos have themselves had a direct effect on world events, such as Innocence of Muslims (2012) which spurred protests and related anti-American violence internationally.[305] TED curator Chris Anderson described a phenomenon by which geographically distributed individuals in a certain field share their independently developed skills in YouTube videos, thus challenging others to improve their own skills, and spurring invention and evolution in that field.[290] Journalist Virginia Heffernan stated in The New York Times that such videos have "surprising implications" for the dissemination of culture and even the future of classical music.[306]

The Legion of Extraordinary Dancers[307] and the YouTube Symphony Orchestra[308] selected their membership based on individual video performances.[290][308] Further, the cybercollaboration charity video "We Are the World 25 for Haiti (YouTube edition)" was formed by mixing performances of 57 globally distributed singers into a single musical work,[309] with The Tokyo Times noting the "We Pray for You" YouTube cyber-collaboration video as an example of a trend to use crowdsourcing for charitable purposes.[310] The anti-bullying It Gets Better Project expanded from a single YouTube video directed to discouraged or suicidal LGBT teens,[311] that within two months drew video responses from hundreds including U.S. President Barack Obama, Vice President Biden, White House staff, and several cabinet secretaries.[312] Similarly, in response to fifteen-year-old Amanda Todd's video "My story: Struggling, bullying, suicide, self-harm," legislative action was undertaken almost immediately after her suicide to study the prevalence of bullying and form a national anti-bullying strategy.[313] In May 2018, the London Metropolitan Police claimed that drill videos that talk about violence give rise to gang-related violence. YouTube deleted 30 music videos after the complaint.[314]

Viewership

In January 2012, it was estimated that visitors to YouTube spent an average of 15 minutes a day on the site, in contrast to the four or five hours a day spent by a typical US citizen watching television.[62] In 2017, viewers on average watched YouTube on mobile devices for more than an hour every day.[315]

Revenue

Prior to 2020, Google did not provide detailed figures for YouTube's running costs, and YouTube's revenues in 2007 were noted as "not material" in a regulatory filing.[316] In June 2008, a Forbes magazine article projected the 2008 revenue at $200 million, noting progress in advertising sales.[317] In 2012, YouTube's revenue from its ads program was estimated at $3.7 billion.[318] In 2013, it nearly doubled and was estimated to hit $5.6 billion according to eMarketer,[318][319] while others estimated $4.7 billion.[318] The vast majority of videos on YouTube are free to view and supported by advertising.[70] In May 2013, YouTube introduced a trial scheme of 53 subscription channels with prices ranging from $0.99 to $6.99 a month.[320] The move was seen as an attempt to compete with other providers of online subscription services such as Netflix and Hulu.[70]

Google first published exact revenue numbers for YouTube in February 2020 as part of Alphabet's 2019 financial report. According to Google, YouTube had made US$15.1 billion in ad revenue in 2019, in contrast to US$8.1 billion in 2017 and US$11.1 billion in 2018. YouTube's revenues made up nearly 10% of the total Alphabet revenue in 2019.[1][321] These revenues accounted for approximately 20 million subscribers combined between YouTube Premium and YouTube Music subscriptions, and 2 million subscribers to YouTube TV.[322]
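As a worked illustration of the figures quoted above, the year-over-year growth implied by the three reported ad revenue numbers can be computed directly. This is only an arithmetic sketch; the function name `yoy_growth` is hypothetical and the inputs are the rounded values given in the text.

```python
# Ad revenue in billions of US dollars, as quoted in the paragraph above.
ad_revenue = {2017: 8.1, 2018: 11.1, 2019: 15.1}

def yoy_growth(revenue_by_year):
    """Return {year: percent growth over the previous year}, rounded to 0.1."""
    years = sorted(revenue_by_year)
    return {
        later: round((revenue_by_year[later] / revenue_by_year[earlier] - 1) * 100, 1)
        for earlier, later in zip(years, years[1:])
    }

growth = yoy_growth(ad_revenue)
for year, pct in growth.items():
    print(f"{year}: {pct:+.1f}% over the previous year")
```

On these rounded inputs, growth comes out at roughly 37% for 2018 and 36% for 2019.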

Advertisement partnerships

YouTube entered into a marketing and advertising partnership with NBC in June 2006.[323] In March 2007, it struck a deal with BBC for three channels with BBC content, one for news and two for entertainment.[324] In November 2008, YouTube reached an agreement with MGM, Lions Gate Entertainment, and CBS, allowing the companies to post full-length films and television episodes on the site, accompanied by advertisements in a section for U.S. viewers called "Shows". The move was intended to create competition with websites such as Hulu, which features material from NBC, Fox, and Disney.[325][326] In November 2009, YouTube launched a version of "Shows" available to UK viewers, offering around 4,000 full-length shows from more than 60 partners.[327] In January 2010, YouTube introduced an online film rentals service,[328] which is only available to users in the United States, Canada, and the UK as of 2010.[329][330] The service offers over 6,000 films.[331]

Partnership with video creators

In May 2007, YouTube launched its Partner Program (YPP), a system based on AdSense which allows the uploader of the video to share the revenue produced by advertising on the site.[332] YouTube typically takes 45 percent of the advertising revenue from videos in the Partner Program, with 55 percent going to the uploader.[333][334]

There are over a million members of the YouTube Partner Program.[335] According to TubeMogul, in 2013 a pre-roll advertisement on YouTube (one that is shown before the video starts) cost advertisers on average $7.60 per 1000 views. Usually no more than half of eligible videos have a pre-roll advertisement, due to a lack of interested advertisers.[336]
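The 55 percent uploader share and the 2013 TubeMogul cost figure quoted above can be combined into a rough payout estimate. The sketch below is illustrative only: the function name and the `fill_rate` parameter are assumptions, and treating "no more than half of eligible videos" as a 50% share of views carrying a pre-roll ad is a simplification rather than anything YouTube has published.

```python
def estimate_uploader_payout(views, cpm_usd=7.60, fill_rate=0.5, uploader_share=0.55):
    """Estimate an uploader's pre-roll ad earnings in US dollars.

    views          -- total video views
    cpm_usd        -- advertiser cost per 1000 ad views (2013 TubeMogul average)
    fill_rate      -- assumed fraction of views that carry a pre-roll ad
    uploader_share -- fraction of ad revenue paid to the uploader
    """
    ad_views = views * fill_rate
    gross_ad_revenue = ad_views / 1000 * cpm_usd
    return gross_ad_revenue * uploader_share

# One million views under the assumptions above.
payout = estimate_uploader_payout(1_000_000)
print(f"Estimated uploader payout: ${payout:,.2f}")
```

Under these assumptions, one million views would gross about $3,800 in pre-roll advertising, of which about $2,090 would go to the uploader.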

YouTube policies restrict certain forms of content from being included in videos being monetized with advertising, including videos containing violence, strong language, sexual content, "controversial or sensitive subjects and events, including subjects related to war, political conflicts, natural disasters and tragedies, even if graphic imagery is not shown" (unless the content is "usually newsworthy or comedic and the creator's intent is to inform or entertain"),[337] and videos whose user comments contain "inappropriate" content.[338]

In 2013, YouTube introduced an option for channels with at least a thousand subscribers to require a paid subscription in order for viewers to watch videos.[339][340] In April 2017, YouTube set an eligibility requirement of 10,000 lifetime views for monetization.[341] On January 16, 2018, the eligibility requirement for monetization was changed to 4,000 hours of watch time within the past 12 months and 1,000 subscribers.[341] The move was seen as an attempt to ensure that videos being monetized did not lead to controversy, but was criticized for penalizing smaller YouTube channels.[342]
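The January 2018 monetization threshold described above amounts to two conditions that must both hold. A minimal sketch, assuming a hypothetical `ChannelStats` record (not part of any YouTube API):

```python
from dataclasses import dataclass

@dataclass
class ChannelStats:
    """Hypothetical summary of a channel; field names are illustrative."""
    subscribers: int
    watch_hours_last_12_months: float

def meets_2018_threshold(stats, min_hours=4000, min_subscribers=1000):
    """True if the channel satisfies both January 2018 requirements."""
    return (
        stats.watch_hours_last_12_months >= min_hours
        and stats.subscribers >= min_subscribers
    )

small_channel = ChannelStats(subscribers=850, watch_hours_last_12_months=6200)
print(meets_2018_threshold(small_channel))  # enough watch time, too few subscribers
```

A channel such as `small_channel`, with ample watch time but fewer than 1,000 subscribers, fails the check, which illustrates why the change was criticized as penalizing smaller channels.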

YouTube Play Buttons, a part of the YouTube Creator Rewards, are a recognition by YouTube of its most popular channels.[343] The trophies, made of nickel-plated copper-nickel alloy, gold-plated brass, silver-plated metal, and ruby, are given to channels with at least one hundred thousand, one million, ten million, and fifty million subscribers, respectively.[344][345]
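The subscriber tiers above form a simple threshold lookup. The sketch below maps a subscriber count to the trophy material listed in the text; the function and constant names are hypothetical.

```python
from bisect import bisect_right

# Subscriber thresholds and the corresponding trophy materials, in ascending order.
THRESHOLDS = [100_000, 1_000_000, 10_000_000, 50_000_000]
MATERIALS = [
    "nickel-plated copper-nickel alloy",
    "gold-plated brass",
    "silver-plated metal",
    "ruby",
]

def play_button_material(subscribers):
    """Return the material of the highest tier reached, or None below 100,000."""
    tiers_reached = bisect_right(THRESHOLDS, subscribers)
    return MATERIALS[tiers_reached - 1] if tiers_reached else None

for count in (99_999, 100_000, 12_500_000):
    print(count, "->", play_button_material(count))
```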

Revenue to copyright holders

The majority of YouTube's advertising revenue goes to the publishers and video producers who hold the rights to their videos; the company retains 45% of the ad revenue.[346] In 2010, it was reported that nearly a third of the videos with advertisements were uploaded without permission of the copyright holders. YouTube gives an option for copyright holders to locate and remove their videos or to have them continue running for revenue.[347] In May 2013, Nintendo began enforcing its copyright ownership and claiming the advertising revenue from video creators who posted screenshots of its games.[348] In February 2015, Nintendo agreed to share the revenue with the video creators.[349][350][351]

Community policy

YouTube has a set of community guidelines aimed at reducing abuse of the site's features. Generally prohibited material includes sexually explicit content, videos of animal abuse, shock videos, content uploaded without the copyright holder's consent, hate speech, spam, and predatory behavior.[352] Despite the guidelines, YouTube has faced criticism from news sources for retaining content in violation of these guidelines.

Copyrighted material

At the time of uploading a video, YouTube users are shown a message asking them not to violate copyright laws.[353] Despite this advice, many unauthorized clips of copyrighted material remain on YouTube. YouTube does not view videos before they are posted online, and it is left to copyright holders to issue a DMCA takedown notice pursuant to the terms of the Online Copyright Infringement Liability Limitation Act. Any successful complaint about copyright infringement results in a YouTube copyright strike. Three successful complaints for copyright infringement against a user account will result in the account and all of its uploaded videos being deleted.[354][355] Organizations including Viacom, Mediaset, and the English Premier League have filed lawsuits against YouTube, claiming that it has done too little to prevent the uploading of copyrighted material.[356][357][358] Viacom, demanding $1 billion in damages, said that it had found more than 150,000 unauthorized clips of its material on YouTube that had been viewed "an astounding 1.5 billion times". YouTube responded by stating that it "goes far beyond its legal obligations in assisting content owners to protect their works".[359]

During the same court battle, Viacom won a court ruling requiring YouTube to hand over 12 terabytes of data detailing the viewing habits of every user who has watched videos on the site. The decision was criticized by the Electronic Frontier Foundation, which called the court ruling "a setback to privacy rights".[360][361] In June 2010, Viacom's lawsuit against Google was rejected in a summary judgment, with U.S. federal Judge Louis L. Stanton stating that Google was protected by provisions of the Digital Millennium Copyright Act. Viacom announced its intention to appeal the ruling.[362] On April 5, 2012, the United States Court of Appeals for the Second Circuit reinstated the case, allowing Viacom's lawsuit against Google to be heard in court again.[363] On March 18, 2014, the lawsuit was settled after seven years with an undisclosed agreement.[364]

In August 2008, a US court ruled in Lenz v. Universal Music Corp. that copyright holders cannot order the removal of an online file without first determining whether the posting reflected fair use of the material. The case involved Stephanie Lenz from Gallitzin, Pennsylvania, who had made a home video of her 13-month-old son dancing to Prince's song "Let's Go Crazy", and posted the 29-second video on YouTube.[365] In the case of Smith v. Summit Entertainment LLC, professional singer Matt Smith sued Summit Entertainment for the wrongful use of copyright takedown notices on YouTube.[366] He asserted seven causes of action, and four were ruled in Smith's favor.[367]

In April 2012, a court in Hamburg ruled that YouTube could be held responsible for copyrighted material posted by its users. The performance rights organization GEMA argued that YouTube had not done enough to prevent the uploading of German copyrighted music. YouTube responded by stating:

On November 1, 2016, the dispute with GEMA was resolved, with Google content ID being used to allow advertisements to be added to videos with content protected by GEMA.[369]

In April 2013, it was reported that Universal Music Group and YouTube have a contractual agreement that prevents content blocked on YouTube by a request from UMG from being restored, even if the uploader of the video files a DMCA counter-notice. When a dispute occurs, the uploader of the video has to contact UMG.[370][371] YouTube's owner Google announced in November 2015 that they would help cover the legal cost in select cases where they believe fair use defenses apply.[372]

Content ID

In June 2007, YouTube began trials of a system for automatic detection of uploaded videos that infringe copyright. Google CEO Eric Schmidt regarded this system as necessary for resolving lawsuits such as the one from Viacom, which alleged that YouTube profited from content that it did not have the right to distribute.[373] The system, which was initially called "Video Identification"[374][375] and later became known as Content ID,[376] creates an ID File for copyrighted audio and video material, and stores it in a database. When a video is uploaded, it is checked against the database, and the system flags the video as a copyright violation if a match is found.[377] When this occurs, the content owner has the choice of blocking the video to make it unviewable, tracking the viewing statistics of the video, or adding advertisements to the video. By 2010, YouTube had "already invested tens of millions of dollars in this technology".[375] In 2011, YouTube described Content ID as "very accurate in finding uploads that look similar to reference files that are of sufficient length and quality to generate an effective ID File".[377] By 2012, Content ID accounted for over a third of the monetized views on YouTube.[378]
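The general workflow described above (build an ID file from reference material, store it, check each upload against the stored files, then apply the owner's chosen policy) can be sketched as a toy program. This is not YouTube's algorithm, which the sources here do not describe: the exact-hash fingerprint, the class and function names, the owner name, and the 50% overlap threshold are all assumptions made for illustration. A real system would need fingerprints that survive re-encoding and editing, which exact hashes do not.

```python
import hashlib
from enum import Enum

class Policy(Enum):
    """The three owner choices named in the text."""
    BLOCK = "block"
    TRACK = "track"
    MONETIZE = "monetize"

def fingerprint(samples, window=4):
    """Toy 'ID file': hashes of every overlapping window of byte-valued samples."""
    return {
        hashlib.sha256(bytes(samples[i:i + window])).hexdigest()
        for i in range(len(samples) - window + 1)
    }

class ReferenceDatabase:
    """Hypothetical store of reference fingerprints and owner policies."""

    def __init__(self):
        self._entries = []  # list of (owner, fingerprint set, policy)

    def register(self, owner, samples, policy):
        self._entries.append((owner, fingerprint(samples), policy))

    def check_upload(self, samples, min_overlap=0.5):
        """Return (owner, policy) for the first sufficiently similar reference."""
        upload_fp = fingerprint(samples)
        for owner, ref_fp, policy in self._entries:
            if ref_fp and len(upload_fp & ref_fp) / len(ref_fp) >= min_overlap:
                return owner, policy
        return None

db = ReferenceDatabase()
db.register("Example Records", list(range(20)), Policy.MONETIZE)

# An upload that embeds most of the reference between unrelated samples.
reused = [200, 201] + list(range(5, 20)) + [202]
unrelated = list(range(100, 120))

print(db.check_upload(reused))
print(db.check_upload(unrelated))
```

In this toy setup the upload that reuses most of the reference is matched and the owner's policy is returned, while the unrelated upload returns `None`.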

An independent test in 2009 uploaded multiple versions of the same song to YouTube, and concluded that while the system was "surprisingly resilient" in finding copyright violations in the audio tracks of videos, it was not infallible.[379] The use of Content ID to remove material automatically has led to controversy in some cases, as the videos have not been checked by a human for fair use.[380] If a YouTube user disagrees with a decision by Content ID, it is possible to fill in a form disputing the decision.[381] Prior to 2016, videos were not monetized until the dispute was resolved. Since April 2016, videos continue to be monetized while the dispute is in progress, and the money goes to whoever won the dispute.[382] Should the uploader want to monetize the video again, they may remove the disputed audio in the "Video Manager".[383] YouTube has cited the effectiveness of Content ID as one of the reasons why the site's rules were modified in December 2010 to allow some users to upload videos of unlimited length.[384]

Controversial videos

YouTube has also faced criticism over the handling of offensive content in some of its videos. The uploading of videos containing defamation, pornography, and material encouraging criminal conduct is forbidden by YouTube's "Community Guidelines".[352] YouTube relies on its users to flag the content of videos as inappropriate, and a YouTube employee will view a flagged video to determine whether it violates the site's guidelines.[352]

In an effort to limit the spread of misinformation and fake news via its platform, YouTube has rolled out a comprehensive policy on how it plans to deal with technically manipulated videos.[385]

YouTube has also been criticised for suppressing opinions dissenting from the government position, especially related to the 2019 coronavirus pandemic.[386][387][388]

Public disasters

Controversial content has included material relating to Holocaust denial and the Hillsborough disaster, in which 96 football fans from Liverpool were crushed to death in 1989.[389][390] In July 2008, the Culture and Media Committee of the House of Commons of the United Kingdom stated that it was "unimpressed" with YouTube's system for policing its videos, and argued that "proactive review of content should be standard practice for sites hosting user-generated content". YouTube responded by stating:

Imam Anwar al-Awlaki

In October 2010, U.S. Congressman Anthony Weiner urged YouTube to remove from its website videos of imam Anwar al-Awlaki.[392] YouTube pulled some of the videos in November 2010, stating they violated the site's guidelines.[393] In December 2010, YouTube added the ability to flag videos for containing terrorism content.[394]

PRISM

Following media reports about PRISM, NSA's massive electronic surveillance program, in June 2013, several technology companies were identified as participants, including YouTube. According to leaks from the program, YouTube joined the PRISM program in 2010.[395]

Restricted monetization policies

YouTube's policies on "advertiser-friendly content" restrict what may be incorporated into videos being monetized; this includes strong violence, language,[396] sexual content, and "controversial or sensitive subjects and events, including subjects related to war, political conflicts, natural disasters and tragedies, even if graphic imagery is not shown", unless the content is "usually newsworthy or comedic and the creator's intent is to inform or entertain".[397] In September 2016, after introducing an enhanced notification system to inform users of these violations, YouTube's policies were criticized by prominent users, including Philip DeFranco and Vlogbrothers. DeFranco argued that not being able to earn advertising revenue on such videos was "censorship by a different name". A YouTube spokesperson stated that while the policy itself was not new, the service had "improved the notification and appeal process to ensure better communication to our creators".[398][399][400] Boing Boing reported in 2019 that LGBT keywords resulted in demonetization.[401]

Advertiser mass boycott

In March 2017, the government of the United Kingdom pulled its advertising campaigns from YouTube, after reports that its ads had appeared on videos containing extremist content. The government demanded assurances that its advertising would "be delivered in a safe and appropriate way". The Guardian newspaper, as well as other major British and U.S. brands, similarly suspended their advertising on YouTube in response to their advertising appearing near offensive content. Google stated that it had "begun an extensive review of our advertising policies and have made a public commitment to put in place changes that give brands more control over where their ads appear".[402][403] In early April 2017, the YouTube channel h3h3Productions presented evidence claiming that a Wall Street Journal article had fabricated screenshots showing major brand advertising on an offensive video containing Johnny Rebel music overlaid on a Chief Keef music video, citing that the video itself had not earned any ad revenue for the uploader. The video was retracted after it was found that the ads had actually been triggered by the use of copyrighted content in the video.[404][405]

On April 6, 2017, YouTube announced that in order to "ensure revenue only flows to creators who are playing by the rules", it would change its practices to require that a channel undergo a policy compliance review, and have at least 10,000 lifetime views, before they may join the Partner Program.[406]

Logan Paul corpse scandal

In January 2018, YouTube creator Logan Paul faced criticism for a video he had uploaded from a trip to Japan, where he encountered the body of a person who had died by suicide in the Aokigahara forest. The corpse was visible in the video, although its face was censored. The video proved controversial due to its content, with its handling of the subject matter being deemed insensitive by critics. On January 10, eleven days after the video was published, YouTube announced that it would cut Paul from the Google Preferred advertising program. Six days later, YouTube announced tighter thresholds for the partner program to "significantly improve our ability to identify creators who contribute positively to the community", under which channels must have at least 4,000 hours of watch time within the past 12 months and at least 1,000 subscribers. YouTube also announced that videos approved for the Google Preferred program would become subject to manual review, and that videos would be rated based on suitability (with advertisers allowed to choose).[407][408][409]

These changes led to further criticism of YouTube from independent channels, who alleged that the service had been changing its algorithms to give a higher prominence to professionally produced content (such as celebrities, music videos and clips from late night talk shows) that attracts wide viewership and has a lower risk of alienating mainstream advertisers, at the expense of the creators that had bolstered the service's popularity.[410][411]

Conspiracy theories and fringe discourse

YouTube has been criticized for using an algorithm that gives great prominence to videos that promote conspiracy theories, falsehoods and incendiary fringe discourse.[412][413][414] According to an investigation by The Wall Street Journal, "YouTube’s recommendations often lead users to channels that feature conspiracy theories, partisan viewpoints and misleading videos, even when those users haven’t shown interest in such content. When users show a political bias in what they choose to view, YouTube typically recommends videos that echo those biases, often with more-extreme viewpoints."[412][415] When users search for political or scientific terms, YouTube's search algorithms often give prominence to hoaxes and conspiracy theories.[414][416] After YouTube drew controversy for giving top billing to videos promoting falsehoods and conspiracy when people made breaking-news queries during the 2017 Las Vegas shooting, YouTube changed its algorithm to give greater prominence to mainstream media sources.[412][417][418][419] In 2018, it was reported that YouTube was again promoting fringe content about breaking news, giving great prominence to conspiracy videos about Anthony Bourdain's death.[420]

In 2017, it was revealed that advertisements were being placed on extremist videos, including videos by rape apologists, anti-Semites and hate preachers who received ad payouts.[421] After firms began pulling their advertising from YouTube in the wake of this reporting, YouTube apologized and said that it would give firms greater control over where ads were placed.[421]

Alex Jones, known for right-wing conspiracy theories, had built a massive audience on YouTube.[422] YouTube drew criticism in 2018 when it removed a video from Media Matters compiling offensive statements made by Jones, stating that it violated its policies on "harassment and bullying".[423] On August 6, 2018, however, YouTube removed Alex Jones' YouTube page following a content violation.[424]

University of North Carolina professor Zeynep Tufekci has referred to YouTube as "The Great Radicalizer", saying "YouTube may be one of the most powerful radicalizing instruments of the 21st century."[425] Jonathan Albright of the Tow Center for Digital Journalism at Columbia University described YouTube as a "conspiracy ecosystem".[414][426]

In January 2019, YouTube said that it had introduced a new policy starting in the United States intended to stop recommending videos containing "content that could misinform users in harmful ways." YouTube gave flat earth theories, miracle cures, and 9/11 trutherism as examples.[427] Efforts within YouTube engineering to stop recommending borderline extremist videos falling just short of forbidden hate speech, and to track their popularity, were originally rejected because they could interfere with viewer engagement.[428] In late 2019, the site began implementing measures directed towards "raising authoritative content and reducing borderline content and harmful misinformation."[429]

A July 2019 study based on ten YouTube searches related to the climate and climate change, conducted using the Tor Browser, found that the majority of the videos communicated views contrary to the scientific consensus on climate change.[430]

A 2019 BBC investigation of YouTube searches in ten different languages found that YouTube's algorithm promoted health misinformation, including fake cancer cures.[431] In Brazil, YouTube has been linked to pushing pseudoscientific misinformation on health matters, as well as to elevating far-right fringe discourse and conspiracy theories.[432]

Misinformation disseminated via YouTube during the COVID-19 pandemic claimed that 5G communications technology was responsible for the spread of coronavirus disease 2019, which led to the destruction of numerous 5G towers in the United Kingdom. In response, YouTube removed all videos linking 5G and the coronavirus in this manner.[433]

Hateful content

Prior to 2019, YouTube had taken steps to remove specific videos or channels related to supremacist content that had violated its acceptable use policies, but otherwise did not have site-wide policies against hate speech.[434]

In the wake of the March 2019 Christchurch mosque attacks, YouTube and other sites like Facebook and Twitter that allowed user-submitted content drew criticism for doing little to moderate and control the spread of hate speech, which was considered to be a factor in the rationale for the attacks.[435][436] These platforms were pressured to remove such content, but in an interview with The New York Times, YouTube's chief product officer Neal Mohan said that unlike content such as ISIS videos, which take a particular format and are thus easy to detect through computer-aided algorithms, general hate speech was more difficult to recognize and handle, and thus the company could not readily take action to remove it without human interaction.[437]

YouTube joined an initiative led by France and New Zealand with other countries and tech companies in May 2019 to develop tools to be used to block online hate speech and to develop regulations, to be implemented at the national level, to be levied against technology firms that failed to take steps to remove such speech, though the United States declined to participate.[438][439] Subsequently, on June 5, 2019, YouTube announced a major change to its terms of service, "specifically prohibiting videos alleging that a group is superior in order to justify discrimination, segregation or exclusion based on qualities like age, gender, race, caste, religion, sexual orientation or veteran status." YouTube identified specific examples of such videos as those that "promote or glorify Nazi ideology, which is inherently discriminatory". YouTube further stated it would "remove content denying that well-documented violent events, like the Holocaust or the shooting at Sandy Hook Elementary, took place."[434][440]

In June 2020, YouTube banned several channels associated with white supremacy, including those of Stefan Molyneux, David Duke, and Richard B. Spencer, asserting that these channels violated its policies on hate speech. The ban occurred on the same day that Reddit announced a ban on several hate speech sub-forums, including r/The_Donald.[441]

Child protection

Leading into 2017, there was a significant increase in the number of videos related to children, driven both by the popularity of parents vlogging their families' activities and by established content creators moving away from content that was often criticized or demonetized toward family-friendly material. In 2017, YouTube reported that time watching family vloggers had increased by 90%.[442][443] However, with the increase in videos featuring children, the site began to face several controversies related to child safety. During Q2 2017, the owners of popular channel DaddyOFive, which featured themselves playing "pranks" on their children, were accused of child abuse. Their videos were eventually deleted, and two of their children were removed from their custody.[444][445][446][447] A similar case happened in 2019 when the owner of the channel Fantastic Adventures was accused of abusing her adopted children. Her videos were later deleted.[448]

Later in 2017, YouTube came under criticism for showing inappropriate videos targeted at children and often featuring popular characters in violent, sexual or otherwise disturbing situations, many of which appeared on YouTube Kids and attracted millions of views. The term "Elsagate" was coined on the Internet and then used by various news outlets to refer to this controversy.[449][450][451][452] On November 11, 2017, YouTube announced it was strengthening site security to protect children from unsuitable content. Later that month, the company started to mass delete videos and channels that made improper use of family-friendly characters. As part of a broader concern regarding child safety on YouTube, the wave of deletions also targeted channels which showed children taking part in inappropriate or dangerous activities under the guidance of adults. Most notably, the company removed Toy Freaks, a channel with over 8.5 million subscribers that featured a father and his two daughters in odd and upsetting situations.[453][454][455][456][457] According to analytics specialist SocialBlade, it earned up to £8.7 million annually prior to its deletion.[458]

Even for content that appears to be aimed at children and to contain only child-friendly material, YouTube's system allows uploaders to remain anonymous. Questions about this anonymity have been raised in the past, as YouTube has had to remove channels with children's content which, after becoming popular, suddenly included inappropriate content masked as children's content.[459] In addition, some of the most-watched children's programming on YouTube comes from channels that have no identifiable owners, raising concerns about intent and purpose. One channel that had been of concern was "Cocomelon", which provided numerous mass-produced animated videos aimed at children. Up through 2019, it had drawn up to US$10 million a month in ad revenue, and was one of the largest kid-friendly channels on YouTube prior to 2020. Ownership of Cocomelon was unclear outside of its ties to "Treasure Studio", itself an unknown entity, raising questions as to the channel's purpose,[459][460][461] but in February 2020 Bloomberg News was able to confirm the identity of and interview the small team of American owners of "Cocomelon", who stated that their goal for the channel was simply to entertain children, and that they wanted to keep to themselves to avoid attention from outside investors.[462] The anonymity of such channels raises concerns because it is not known what purpose they are trying to serve.[463] The difficulty of identifying who operates these channels "adds to the lack of accountability", according to Josh Golin of the Campaign for a Commercial-Free Childhood, and educational consultant Renée Chernow-O’Leary found that the videos were designed to entertain with no intent to educate, leading both critics and parents to be concerned about children becoming too enraptured by the content from these channels.[459] Content creators who earnestly make kid-friendly videos have found it difficult to compete with larger channels like ChuChu TV, being unable to produce content at the same rate as these large channels and lacking the same means of promotion through YouTube's recommendation algorithms that the larger animated channel networks have shared.[463]

In January 2019, YouTube officially banned videos containing "challenges that encourage acts that have an inherent risk of severe physical harm" (such as the Tide Pod Challenge), and videos featuring pranks that "make victims believe they're in physical danger" or cause emotional distress in children.[464]

Sexualization of children

Also in November 2017, it was revealed in the media that many videos featuring children – often uploaded by the minors themselves, and showing innocent content such as the children playing with toys or performing gymnastics – were attracting comments from pedophiles,[465][466] with predators finding the videos through private YouTube playlists or by typing in certain keywords in Russian.[466] Other child-centric videos originally uploaded to YouTube began propagating on the dark web, and were uploaded to or embedded in forums known to be used by pedophiles.[467]

As a result of the controversy, which added to the concern about "Elsagate", several major advertisers whose ads had been running against such videos froze spending on YouTube.[452][468] In December 2018, The Times found more than 100 grooming cases in which children were manipulated into sexually implicit behavior (such as taking off clothes, adopting sexualised poses and touching other children inappropriately) by strangers.[469] After a reporter flagged the videos in question, half of them were removed, and the rest were removed after The Times contacted YouTube's PR department.[469]

In February 2019, YouTube vlogger Matt Watson identified a "wormhole" that would cause the YouTube recommendation algorithm to draw users into this type of video content, and make all of that user's recommended content feature only these types of videos. Most of these videos had comments from sexual predators commenting with timestamps of when the children were shown in compromising positions, or otherwise making indecent remarks. In some cases, other users had reuploaded the video in unlisted form but with incoming links from other videos, and then monetized these, propagating this network.[470] In the wake of the controversy, the service reported that they had deleted over 400 channels and tens of millions of comments, and reported the offending users to law enforcement and the National Center for Missing and Exploited Children. A spokesperson explained that "any content — including comments — that endangers minors is abhorrent and we have clear policies prohibiting this on YouTube. There's more to be done, and we continue to work to improve and catch abuse more quickly."[471][472] Despite these measures, AT&T, Disney, Dr. Oetker, Epic Games, and Nestlé all pulled their advertising from YouTube.[470][473]

Subsequently, YouTube began to demonetize and block advertising on the types of videos that have drawn these predatory comments. The service explained that this was a temporary measure while they explore other methods to eliminate the problem.[474] YouTube also began to flag channels that predominantly feature children, and preemptively disable their comments sections. "Trusted partners" can request that comments be re-enabled, but the channel will then become responsible for moderating comments. These actions mainly target videos of toddlers, but videos of older children and teenagers may be protected as well if they contain actions that can be interpreted as sexual, such as gymnastics. YouTube stated it was also working on a better system to remove comments on other channels that matched the style of child predators.[475][476]